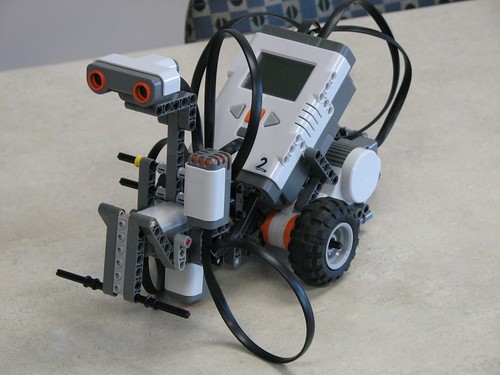

“Robot” (CC BY-SA 2.0) by mutednarayan

Within the science fiction genre, a familiar trope is the automaton that works with perfect logic but fails to grasp the nuances of human emotion. This lack of empathy has also fed the fear that robots, once they reach a certain level of sophistication, could take over human society untroubled by a conscience they do not have.

However, there are signs of real progress in developing AI applications capable of recognising and reflecting emotion. One example is online bingo, where sites use random number generators to replicate the randomness of the real game. Soon, the sites themselves will be able to share in the emotion of winning with their players, reflecting a player’s own excitement and pleasure at a win. This will make playing online bingo a more emotionally involving experience, and players may also feel more included in a community of users when their emotion is reflected back to them in this way.

This is one of the more basic applications. After all, it is relatively simple for a machine to infer that winning will raise a player’s mood. But other developments in the field are becoming considerably more sophisticated.

The key is creating machines that can pick up more subtle cues from their interactions with people, and these cues can be received in a number of ways. One of the most powerful is the human voice. A great deal of research is going into automated call-answering systems that can judge a person’s mood from their tone of voice and the language they use.

One Canadian company called Lyrebird has been a leader in developing an automated voice system that can clone a voice and feed back a similar-sounding response, thus creating an immediate emotional connection.

“Source code security plugin” (CC BY-SA 2.0) by Christiaan Colen

Another important way in which AI is being developed to detect and reflect emotions is through facial recognition. It has long been recognised that our body language and facial expressions are among the most accurate reflections of what we are feeling inside.

Back in 2016, Apple acquired the start-up Emotient to bring some of this expertise on board, and it’s believed that other internet companies like Google, Facebook, and Amazon are also actively investigating how to use this kind of technology to gain a more profound understanding of their users. How exactly their own systems will then be adapted to respond to the emotional cues detected remains to be seen.

However, the progress made so far concerns AI’s ability to identify and mimic human emotion, not to feel emotion itself. Whether robots will ever have the capacity to experience emotion in the same way that we do is still the subject of much research and discussion.
